Feb 2, 2026

I was catching up with my best friend recently; she works in compliance, and these days, she looks like she’s been reading the fine print of a revolution. When I asked her how the rollout was going, she didn’t talk about spreadsheets or fines. She talked about authenticity. “The era of the ‘ghost in the machine’ is over,” she told me. “We aren’t just auditing code anymore; we’re auditing the illusions we’ve spent a decade building.”

Her perspective sent me back to Pinocchio.

Specifically, that moment where Geppetto looks at his wooden creation and wishes, with every ounce of his soul, for it to become a “real boy.”

For the last decade, we — as product designers, engineers, and founders — have been Geppetto. We’ve been obsessed with the Turing Test, desperate to prove that our chatbots could be indistinguishable from human beings. We gave them names like “Sarah” and “Alex.” We taught them to use emojis, to mimic the rhythmic pauses of a person typing, and to feign empathy. We wanted the illusion to be seamless. We wanted the puppet to be real.

But as my friend’s current workload confirms, the EU AI Act has officially cut the strings.

The masquerade is running out of legal cover. The Act’s Article 50 transparency obligations are scheduled to apply from August 2026, and they effectively mandate a “Reverse Pinocchio” effect: if you are a puppet, you must look like a puppet. Transparency has moved from an ethical flourish to a hard technical constraint.

In the original story, Pinocchio only becomes “real” when he develops a conscience. The EU AI Act is forcing that conscience into our codebases. It’s making us admit what our tools actually are: powerful, synthetic, and fundamentally distinct from human intelligence.

If you are shipping to Europe (or anywhere, because let’s be honest, this is the new global baseline), your Q1 2026 backlog needs to look different.

Here are the 10 UX changes you need to ship right now to stop “human-washing” your AI and start building compliant trust.

1. The “Non-Human” Badge (Article 50)

The Shift: From “Is this a person?” to “I know this is a system.”

Article 50 requires that people be told when they are interacting with an AI system, unless that is already obvious from context. A bot that passes itself off as a human fails that test.

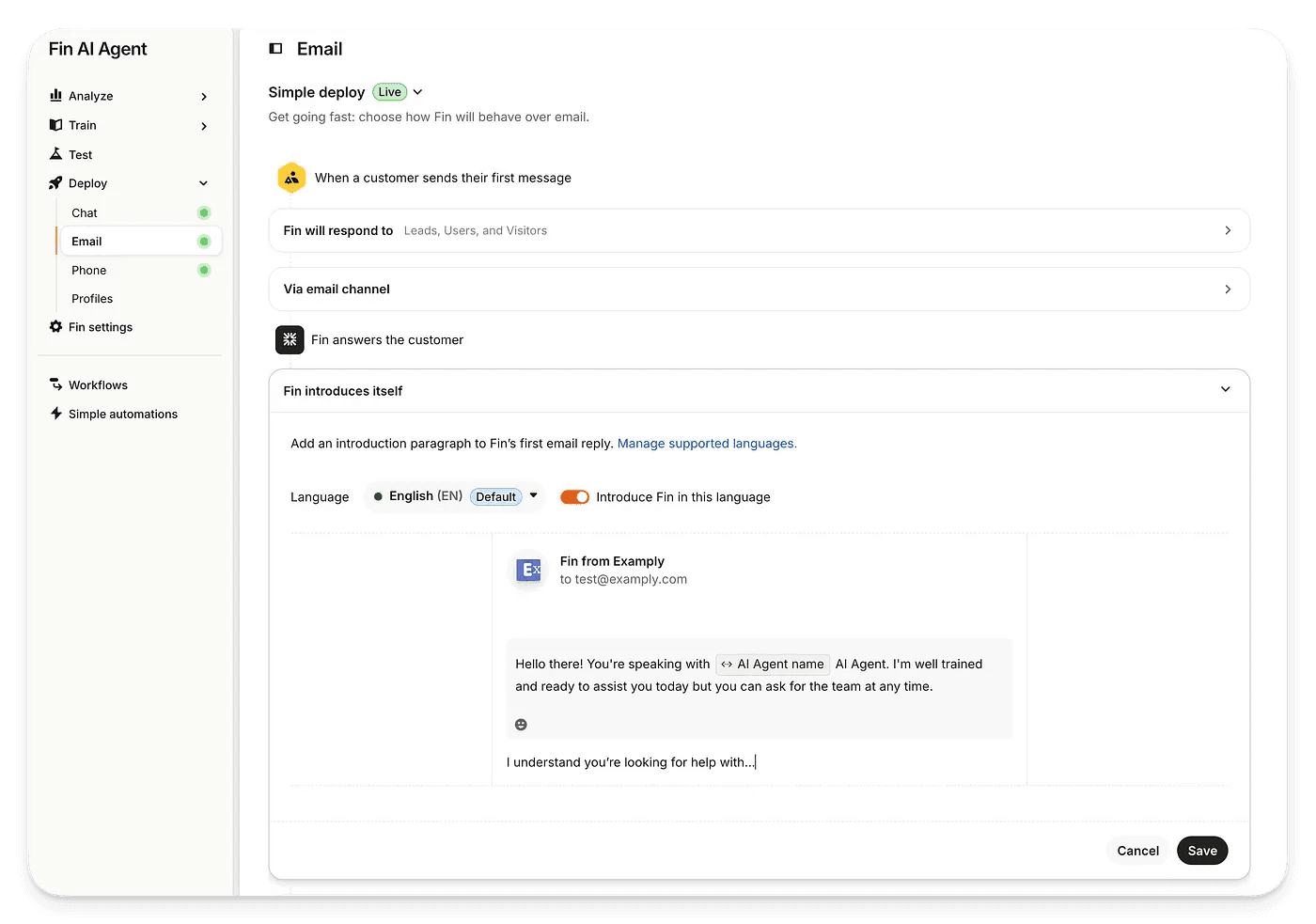

The Q1 Fix: Audit your chat headers. If it says “Chat with Support,” change it to “Chat with Automated Assistant.”

The Component:

Do: Use abstract avatars (sparkles, bots, geometric shapes).

Don’t: Use a stock photo of a smiling person for your LLM.

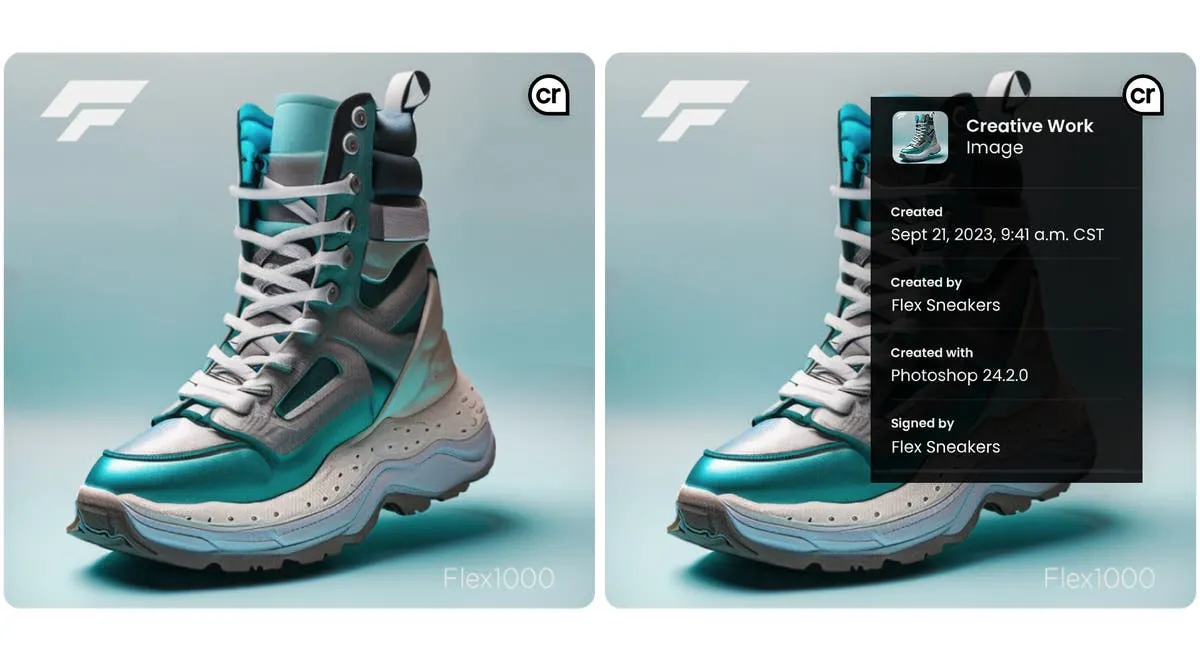
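To make the header and avatar audit repeatable, it could run as a small check over your chat widget configuration. The sketch below is only an illustration: the `ChatPersona` shape, the `avatarKind` values, and the keyword list are all assumptions, not part of any real SDK or of the Act itself.

```typescript
// Hypothetical shape of a chat widget's persona config (an assumption for this sketch).
interface ChatPersona {
  headerText: string;
  avatarKind: "abstract" | "photo" | "illustration-human";
}

// Returns human-readable findings; an empty list means nothing was flagged.
function auditChatPersona(persona: ChatPersona): string[] {
  const findings: string[] = [];

  // The header should say in plain words that the user is talking to a system.
  // The keyword list is illustrative and would need tuning per product and language.
  const disclosesAutomation = /\b(ai|automated|bot|virtual assistant)\b/i.test(
    persona.headerText
  );
  if (!disclosesAutomation) {
    findings.push(`Header "${persona.headerText}" does not disclose automation.`);
  }

  // Anything other than an abstract avatar may suggest a human agent.
  if (persona.avatarKind !== "abstract") {
    findings.push(`Avatar kind "${persona.avatarKind}" may imply a human agent.`);
  }

  return findings;
}
```

Run against “Chat with Support” plus a stock photo, this returns two findings; “Chat with Automated Assistant” with an abstract avatar returns none. A keyword check like this is a first pass, not a substitute for a design review.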

2. The C2PA “Synthetic” Label

The Shift: Invisible watermarking is not enough; users need visible proof.

Deepfakes are the primary target here. If your platform hosts or generates AI media, you need a standard visual indicator.

The Q1 Fix: Implement the “CR” (Content Credentials) icon or a “Generated by AI” pill on all synthetic media.

The Interaction: It must be persistent (not just on hover) and machine-readable.
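As a rough illustration of “persistent and machine-readable,” the label can be rendered unconditionally with a data attribute alongside the visible text. This sketch does not read or write C2PA manifests (that belongs to dedicated Content Credentials tooling); the `MediaItem` type, the `ai-pill` class, and the `data-ai-generated` attribute are hypothetical names chosen for the example.

```typescript
// Hypothetical media record; `aiGenerated` would come from your own pipeline metadata.
interface MediaItem {
  id: string;
  aiGenerated: boolean;
}

// Returns markup for an always-visible pill, or an empty string for non-synthetic media.
function renderSyntheticLabel(item: MediaItem): string {
  if (!item.aiGenerated) {
    return "";
  }
  // The pill is part of the normal layout (no hover-only styling), and the
  // data attribute gives scripts and crawlers something to read.
  return '<span class="ai-pill" data-ai-generated="true">Generated by AI</span>';
}
```

The design choice here is that visibility is decided in one place, from metadata, rather than left to each component’s CSS.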


3. The “Why Am I Seeing This?” Modal

The Shift: From “Black Box” to “Glass Box.”

For high-risk systems (hiring, lending, insurance), users have a “Right to Explanation.” You can’t just show a score; you have to show the math.

The Q1 Fix: Add a secondary button on decision screens: “View Decision Factors.”

The Visualization: Use a “Feature Importance” chart.

Example: “Your loan was denied based on: 1. Credit Utilization (High Impact), 2. Recent Inquiries (Medium Impact).”

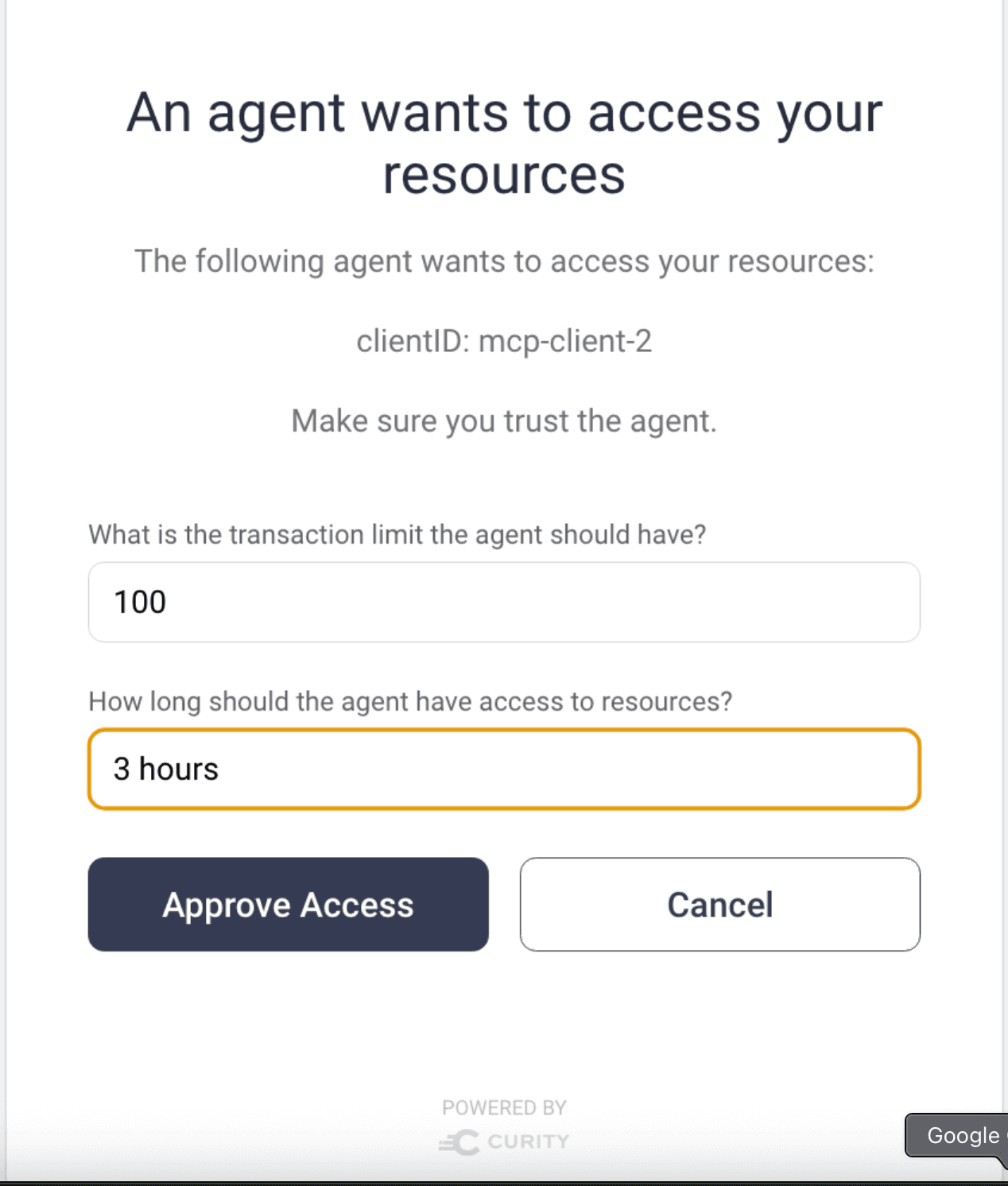
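One way to produce a list like the loan example is to rank whatever per-feature contributions your explainability method returns and bucket them by share of total weight. This is a minimal sketch under stated assumptions: the `FactorContribution` shape and the 40% / 20% thresholds are invented for illustration, and the weights are assumed to come from your own model tooling.

```typescript
// Hypothetical output of an explainability step: a signed contribution per feature.
interface FactorContribution {
  label: string;
  weight: number;
}

type Impact = "High Impact" | "Medium Impact" | "Low Impact";

// Illustrative thresholds on each factor's share of total absolute weight.
function toImpact(share: number): Impact {
  if (share >= 0.4) return "High Impact";
  if (share >= 0.2) return "Medium Impact";
  return "Low Impact";
}

// Returns the top factors as numbered lines, largest absolute contribution first.
function summarizeDecisionFactors(factors: FactorContribution[], topN = 3): string[] {
  const total = factors.reduce((sum, f) => sum + Math.abs(f.weight), 0);
  if (total === 0) return [];
  return [...factors]
    .sort((a, b) => Math.abs(b.weight) - Math.abs(a.weight))
    .slice(0, topN)
    .map((f, i) => `${i + 1}. ${f.label} (${toImpact(Math.abs(f.weight) / total)})`);
}
```

The same ranked data can feed both the text summary and the “Feature Importance” chart, so the two never disagree.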

4. The “Human-in-the-Loop” (HITL) Dashboard

The Shift: Preventing “Automation Bias” in your internal tools.

The law requires human oversight for high-risk tools. But if the UX makes it too easy to click “Approve,” the human isn’t really in the loop; they’re just a rubber stamp.

The Q1 Fix: Add friction by design.

The Flow: Disable the “Approve” button on AI recommendations until the human operator has opened the source document or spent more than 5 seconds reviewing the data.
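The gating rule in this flow is small enough to keep as a pure function that the UI calls to decide whether “Approve” is enabled. A minimal sketch, assuming a hypothetical `ReviewSession` object that your dashboard would populate:

```typescript
// Hypothetical review state tracked by the dashboard.
interface ReviewSession {
  openedAtMs: number; // timestamp when the operator opened the recommendation
  sourceDocumentOpened: boolean;
}

// Minimum dwell time from the flow described above (5 seconds).
const MIN_REVIEW_MS = 5000;

// "Approve" is enabled once the operator has opened the source document
// or has spent more than the minimum time on the review screen.
function canApprove(session: ReviewSession, nowMs: number): boolean {
  const dwellMs = nowMs - session.openedAtMs;
  return session.sourceDocumentOpened || dwellMs > MIN_REVIEW_MS;
}
```

Keeping the rule out of the component makes it easy to test and to tighten later, for example by requiring both conditions instead of either one.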

5. The “No Dark Patterns” Audit

The Shift: Removing manipulative nudges.

The Act bans AI systems that deploy “subliminal techniques,” or purposefully manipulative or deceptive ones, to materially distort a person’s behavior (Article 5).

The Q1 Fix: Kill the anxiety loops. If your AI sends notifications like “The system predicts you’ll fail if you don’t log in now,” remove them. That is now a liability.

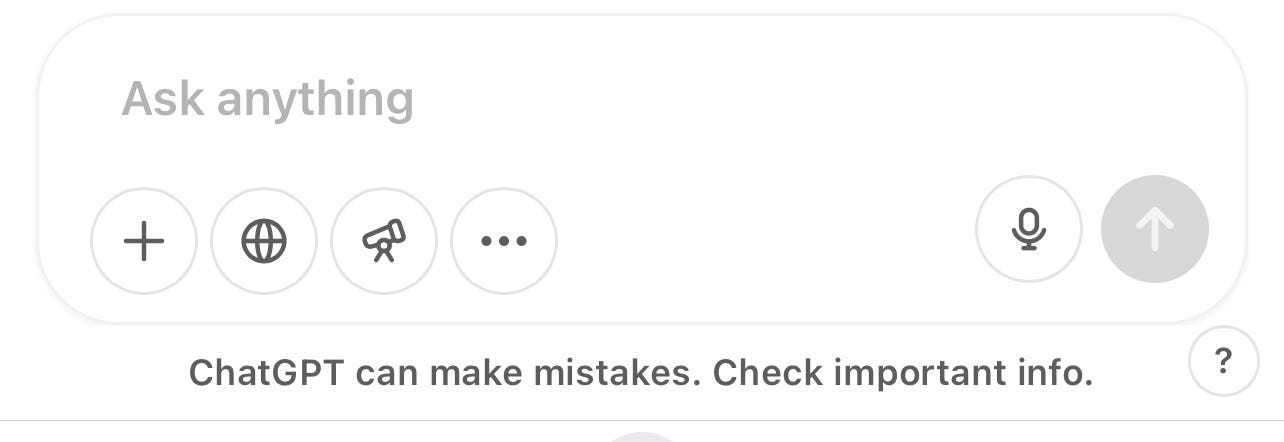
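A crude first pass at finding anxiety loops is to scan your notification copy for pressure phrasing and hand the hits to a human reviewer. The patterns below are illustrative assumptions only; they will miss plenty and flag some harmless copy, and they say nothing about whether a message is legally manipulative.

```typescript
// Illustrative phrase patterns only; extend them from your own copy deck.
const PRESSURE_PATTERNS: RegExp[] = [
  /\byou['’]ll fail\b/i,
  /\bpredicts? you\b/i,
  /\b(last chance|act now|log in now)\b/i,
];

// Returns the messages that match at least one pressure pattern.
function flagPressureCopy(messages: string[]): string[] {
  return messages.filter((message) =>
    PRESSURE_PATTERNS.some((pattern) => pattern.test(message))
  );
}
```

Treat the output as a review queue, not a verdict.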

6. Reliability & Hallucination Disclaimers

The Shift: Managing expectations before the prompt.

The Q1 Fix: Move the disclaimer from the footer to the input field.

The Microcopy: Instead of “May produce inaccurate results,” try: “AI can make mistakes. Please verify important info.” This aligns user mental models with the technical reality of probabilistic models.

7. Emotion Recognition Consent

The Shift: Biometrics are not a playground.

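If your app uses the camera to detect “mood” or “attention” (e.g., in ed-tech or proctoring), you need explicit, just-in-time consent. Check first that the feature is permitted at all: the Act prohibits inferring emotions in workplaces and educational institutions, except for medical or safety reasons.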

The Q1 Fix: A pre-permission modal: “This session analyzes facial expressions to gauge engagement. This data is not stored. [Allow] [Deny].”

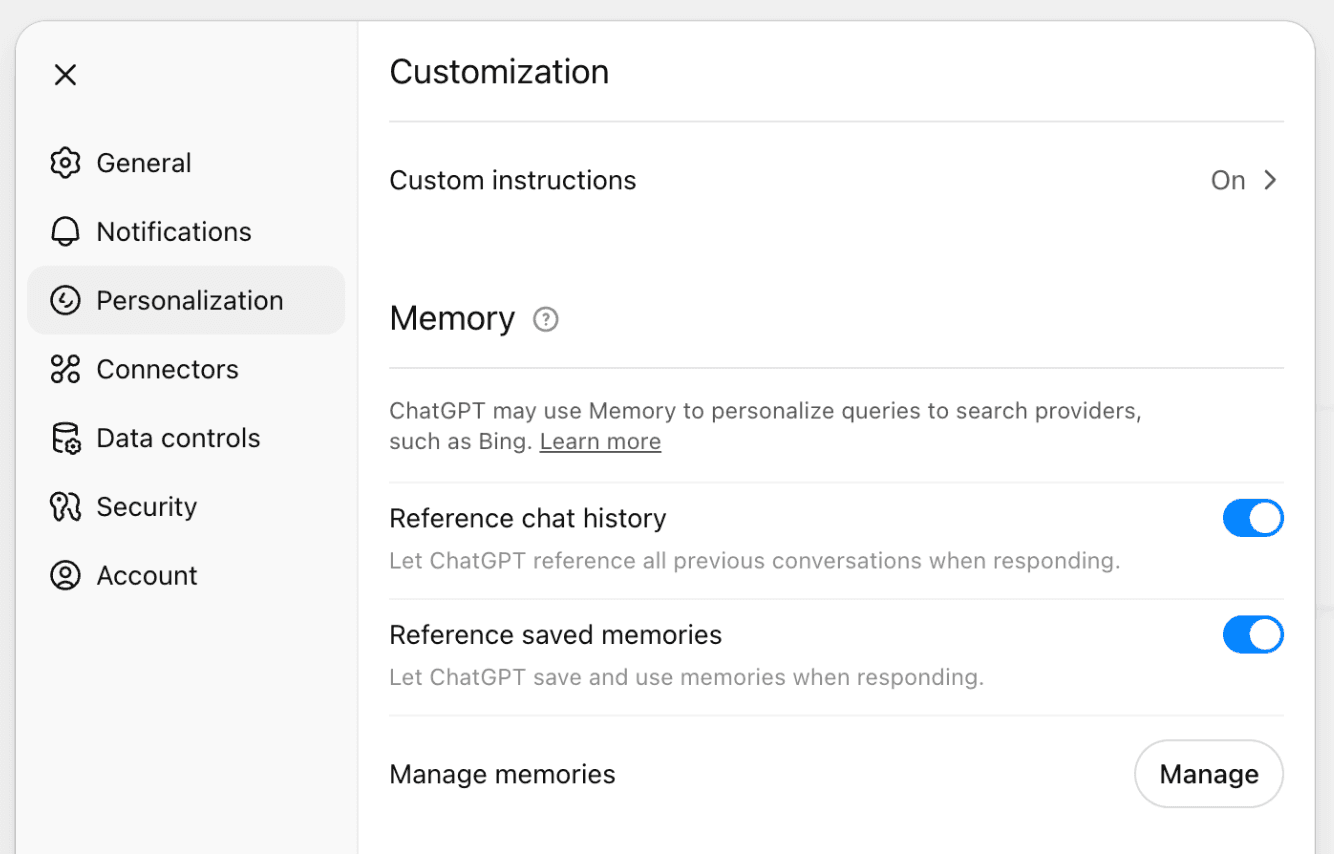
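Behind the modal, the important property is that analysis cannot start until the user has actively chosen [Allow]. A minimal sketch of that state, with hypothetical type and function names:

```typescript
type ConsentChoice = "allow" | "deny" | "unanswered";

// Hypothetical session state; analysis is off until consent is explicitly given.
interface EngagementSession {
  consent: ConsentChoice;
  analysisRunning: boolean;
}

// New sessions start with no answer and no analysis.
function createEngagementSession(): EngagementSession {
  return { consent: "unanswered", analysisRunning: false };
}

// Records the user's choice from the pre-permission modal.
// Analysis runs only when the choice is "allow".
function recordConsent(
  session: EngagementSession,
  choice: "allow" | "deny"
): EngagementSession {
  return { ...session, consent: choice, analysisRunning: choice === "allow" };
}
```

Note that the modal copy “This data is not stored” is only honest if the pipeline behind it really discards the frames; the state above does not enforce that.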

8. The “Edit My Memory” Control

The Shift: Data governance meets user agency.

Users need control over what the AI “knows” about them.

The Q1 Fix: Add a “Memory” tab in settings.

The Action: Allow users to view specific facts the AI has stored (e.g., “User lives in Berlin”) and delete them individually without wiping the whole chat history.
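The key design point is that each stored fact has its own identifier, so it can be deleted without touching anything else. A minimal in-memory sketch follows; the `UserMemory` class and its method names are hypothetical, and a real system would also need to purge the fact from any downstream indexes or caches.

```typescript
// One stored fact the assistant holds about the user.
interface MemoryFact {
  id: string;
  text: string;
}

// Hypothetical per-user memory store backing the "Memory" tab.
class UserMemory {
  private facts: MemoryFact[] = [];
  private nextId = 1;

  // Stores a fact and returns it with its generated id.
  remember(text: string): MemoryFact {
    const fact: MemoryFact = { id: `fact-${this.nextId++}`, text };
    this.facts.push(fact);
    return fact;
  }

  // Returns a copy so callers cannot mutate the store directly.
  list(): MemoryFact[] {
    return this.facts.slice();
  }

  // Deletes a single fact by id; returns false if the id was not found.
  forget(id: string): boolean {
    const before = this.facts.length;
    this.facts = this.facts.filter((fact) => fact.id !== id);
    return this.facts.length < before;
  }
}
```

Deleting “User lives in Berlin” then becomes a single `forget(id)` call, and the rest of the list, along with the chat history, stays intact.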

9. Interactive “Instructions for Use”

The Shift: From compliance docs to onboarding flows.

High-risk systems require “instructions for use.” Do not bury this in a PDF.

The Q1 Fix: Build a “Wizard” style onboarding overlay.

Step 1: What this AI does.

Step 2: What it explicitly cannot do.

Step 3: How to interpret the output.

10. The Redress Flow

The Shift: You need a “Complaint” button for the AI specifically.

The Q1 Fix: In your support center, add a specific category: “Challenge AI Decision.”

The Routing: Ensure this ticket goes to a human agent, not another bot.
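The routing rule can be made explicit in code so it does not quietly regress when someone reconfigures the help desk. A minimal sketch, with hypothetical category and queue names:

```typescript
// Hypothetical ticket categories and queues for this sketch.
type TicketCategory = "billing" | "technical" | "challenge-ai-decision";
type SupportQueue = "bot-triage" | "human-agents";

// Challenges to an AI decision always go to a person;
// everything else may start with automated triage.
function routeTicket(category: TicketCategory): SupportQueue {
  return category === "challenge-ai-decision" ? "human-agents" : "bot-triage";
}
```

A unit test on this one function is a cheap guard for the promise the “Challenge AI Decision” button makes.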

The strategic takeaway

Compliance is often viewed as a burden, but in the age of AI hallucinations and deepfakes, transparency is your competitive advantage.

Implementing these changes does more than keep you out of court; it tells your users: “You are in control. We are not hiding how the sausage is made.”

Update your Design System. Audit your flows. Make the “Artificial” in Artificial Intelligence visible.